AI and Pesak: Is ChatGPT Knowledgeable?

Here is my article for the Jewish Link for this Shabbos:

Before proceeding with this third installment regarding ChatGPT and pesak, let’s consolidate some ideas from the previous two articles (“Can ChatGPT Pasken? Cheftza vs. Gavra” and “ChatGPT, Pesak Types, and Semantic Shift”). In the cheftza approach (Rav Schachter), pesak is legitimate if it is accurate, regardless of who or what issues it. In the gavra approach (Rav Wiederblank), pesak is valid only if a posek issues it, and there may be qualifications to be a posek (such as being human, Jewish, religious, male, adult, or intelligent).

Two qualities that are necessary but insufficient are that the person be gemir (knowledgeable of the relevant sources) and sevir (adept at reasoning). From the cheftza perspective, these are required to ensure an accurate conclusion. From the gavra perspective, perhaps they define a posek’s competency and legitimacy. Alternatively, though Rav Wiederblank doesn’t maintain this, gemir and sevir might be aspects of intelligence, even if not all humans attain them in the halachic realm. Then any sufficiently intelligent entity, human or artificial, might render valid pesak.

Next, the word pesak is ambiguous. It can refer to (A) simple lookup questions, such as that the bracha on an apple is ha’etz; (B) non-trivial questions, which require comparing the case to existing law (apples to apples rather than to pomegranates) and understanding and applying the underlying principles that interact; and (C) novel and creative pesak, which involves reanalyzing sugyot and boldly deciding between halachic theories put forth by earlier scholars.

Type A is not really formal pesak, so from the cheftza perspective it must be accurate, while from the gavra perspective no pesak is necessary. In a 1984 shiur, “Klalei Hora’a – Laws of a Posek” (yutorah.org/lectures/753343), Rav Hershel Schachter distinguished between types of pesak: a mid-level posek sticks with what I’d roughly label type B, while one with greater knowledge and ability sometimes has an obligation to engage in type C. That is, these three types of pesak require different levels of gemir and sevir.

Finally, a constrained level of gemir can be insufficient, for humans and machines alike. One might rule in a case that should be passed along to a posek; rule in areas outside one’s competency; or misunderstand idioms or ideas that draw on other gemaras and rabbinic literature, so that words one knows actually mean something else. Indeed, I’ve conducted research into how ChatGPT misunderstands Chazalic Hebrew.

LLMs Don’t “Know” Anything

Large Language Models (LLMs) like ChatGPT appear to know billions of facts, but given their internal architecture, they really know nothing. For example, which actor played Harry Potter in the films? You know it’s Daniel Radcliffe, and a database might store that information. ChatGPT can answer it too, but only because it is a predictive text algorithm: given a context of words (or tokens), it can repeatedly tell you which words are likely to come next. It learned those next-token probabilities from massive amounts of human-produced data on the Internet. So if you write “The actor who played Harry Potter”, a continuation model will know that the next word is likely either “is” or “was”. It could be another word, such as “ate” or “jumped”, but the model will select a fairly frequent word, based not just on the current sentence but on all the words in the preceding paragraphs. After thereby generating “The actor who played Harry Potter is”, the model can predict the next word as “Daniel”, then the next word as “Radcliffe”, and finally a period.
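To make the idea concrete, here is a drastically simplified sketch. It is a toy “bigram” model, not how ChatGPT actually works: it looks only at the single previous word (a real LLM uses a neural network conditioned on the entire preceding context), and it always picks the most frequent continuation, whereas real systems usually sample among likely words. The tiny corpus and the function names are invented for illustration.

```python
from collections import Counter, defaultdict

def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """For each word, count which words follow it in the corpus."""
    follows: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows

def continue_text(prompt: str, follows: dict[str, Counter], max_words: int = 5) -> str:
    """Greedily append the most frequent next word, one word at a time."""
    words = prompt.split()
    for _ in range(max_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # this toy model has never seen the last word
        words.append(candidates.most_common(1)[0][0])
        if words[-1].endswith("."):
            break  # stop at the end of a sentence
    return " ".join(words)

# Invented toy "training data".
corpus = [
    "The actor who played Harry Potter is Daniel Radcliffe.",
    "The actor who played Harry Potter was Daniel Radcliffe.",
    "The actor who played Harry Potter is Daniel Radcliffe.",
    "Harry Potter jumped over the fence.",
]
model = train_bigrams(corpus)
print(continue_text("The actor who played Harry Potter", model))
```

The model “answers” correctly only because “is”, then “Daniel”, then “Radcliffe.” were the most frequent followers in its data; nowhere does it store the fact that Radcliffe is an actor.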

However, there is no “there” there. There’s no ghost in the machine, and the algorithm doesn’t really know who Harry Potter is, or that Daniel Radcliffe is an actor, in the same way that we do. Rather, it is what some refer to as a “stochastic parrot”, capable of imitating human speech based on probability without understanding its meaning. We can contemplate whether generating words without knowing their meaning counts as gemir, under either an accuracy or an intelligence approach.

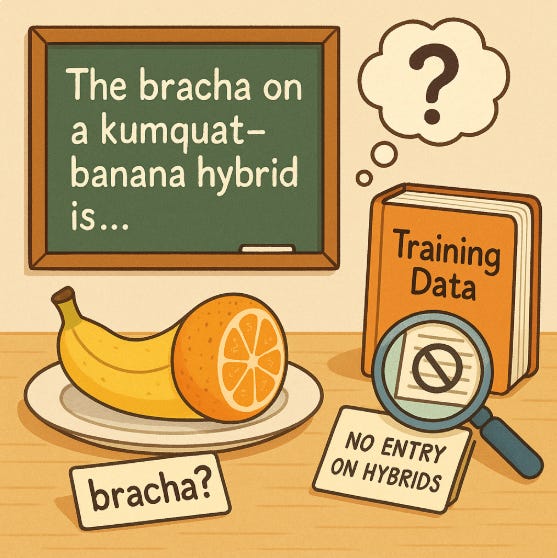

Relatedly, this algorithm often generates false facts, called “hallucinations”. Especially where the answer lies outside the LLM’s training data, but even where it does not, the LLM will generate the next probable word instead of answering “I don’t know”. Given “The bracha on a kumquat-banana hybrid is”, the training data may say nothing about such hybrids, but the model knows that the possible next words include hamotzi, shehekol, ha’etz, and hamavdil, so it will select one of them! The same goes for fabricated Sages, sefarim, or academic journals. Again, this speaks to whether it is gemir, under either an accuracy or an intelligence approach.
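A toy sketch of why this happens, assuming invented probabilities (no real model assigned these numbers) and a hypothetical `complete` function: the sampling step can only choose among candidate words, so “I don’t know” is never an option unless those words themselves happen to be likely.

```python
import random

# Invented next-word probabilities, standing in for what a model might assign
# after a prompt such as "The bracha on a kumquat-banana hybrid is".
NEXT_WORD_PROBS = {"ha'etz": 0.4, "shehekol": 0.3, "hamotzi": 0.2, "hamavdil": 0.1}

def complete(prompt: str, probs: dict[str, float], rng: random.Random) -> str:
    """Append one word sampled by probability; there is no way to abstain."""
    words = list(probs)
    weights = list(probs.values())
    return f"{prompt} {rng.choices(words, weights=weights, k=1)[0]}"

rng = random.Random(0)  # fixed seed so reruns give the same output
print(complete("The bracha on a kumquat-banana hybrid is", NEXT_WORD_PROBS, rng))
```

Whichever bracha comes out, it is delivered with the same fluency, whether or not anything in the training data supports it.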

Retrieval Augmented Generation

Many modern systems address this LLM deficiency by augmenting the core LLM architecture with search. If we ask who portrayed Harry Potter, the system performs a Google search for web pages about the films and passes along the pages’ text together with the question. The LLM then isn’t relying solely on its internal probabilities, but predicts the next words using that text as context.
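Here is a minimal sketch of that flow. It assumes a crude keyword-overlap ranking as a stand-in for a real search engine, and the function names, prompt wording, and documents are all hypothetical. It stops at building the prompt; the actual call to an LLM is left out.

```python
import re

def tokens(text: str) -> set[str]:
    """Lowercase set of words, ignoring punctuation."""
    return set(re.findall(r"\w+", text.lower()))

def keyword_search(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Stand-in for a web search: rank documents by words shared with the query."""
    q = tokens(query)
    ranked = sorted(documents, key=lambda d: len(q & tokens(d)), reverse=True)
    return ranked[:k]

def build_rag_prompt(question: str, documents: list[str], k: int = 2) -> str:
    """Place the retrieved text ahead of the question, as context for the LLM."""
    context = "\n".join(f"- {d}" for d in keyword_search(question, documents, k))
    return f"Use the context to answer.\nContext:\n{context}\nQuestion: {question}\nAnswer:"

docs = [
    "Daniel Radcliffe played Harry Potter in the film series.",
    "Kumquats are small citrus fruits.",
    "The Harry Potter films are based on books by J.K. Rowling.",
]
print(build_rag_prompt("Who played Harry Potter in the films?", docs))
```

The LLM still just predicts next words, but now “Daniel Radcliffe” appears right there in its context.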

This grounds the LLM in actual facts. If the answer is in the context, this technique drastically reduces hallucinations. Common approaches to Retrieval Augmented Generation (RAG) include Internet search; retrieving documents from a vector database whose vectors (sequences of numbers capturing some essential features) closely match the vector of the question; and exploring a knowledge graph.
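The vector-database idea can be sketched with cosine similarity, a common way to measure how closely two vectors match. As a loud simplification, the “vector” here is just word counts stored sparsely; real systems use a learned embedding model that captures meaning rather than exact words. All names and documents are hypothetical.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy 'embedding': word counts. Real systems use a learned embedding model."""
    return Counter(re.findall(r"\w+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity: 1.0 for identical direction, 0.0 for no shared words."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def nearest(query: str, documents: list[str], k: int = 1) -> list[str]:
    """Return the k documents whose vectors best match the query's vector."""
    qv = embed(query)
    return sorted(documents, key=lambda d: cosine(qv, embed(d)), reverse=True)[:k]

docs = ["The bracha on an apple is ha'etz.", "Daniel Radcliffe played Harry Potter."]
print(nearest("What bracha do I make on an apple?", docs))
```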

While the LLM now produces more meaningful results, it still never really thinks, and doesn’t truly know anything in its response. Hallucinations remain possible. Also, who says that all relevant documents are returned? Experts in the field of Information Retrieval speak not of accuracy but of precision (the percentage of returned documents that are relevant to the query) and recall (the percentage of all relevant documents that were returned). If its answer rests on incomplete or irrelevant context, is the LLM gemir?
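In code, with hypothetical document IDs: precision divides the relevant hits by the number of documents returned, while recall divides them by the number of relevant documents that exist.

```python
def precision_recall(retrieved: set[str], relevant: set[str]) -> tuple[float, float]:
    """Precision = hits / returned; recall = hits / all relevant documents."""
    hits = len(retrieved & relevant)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall

# Hypothetical example: 4 documents returned, 3 relevant documents exist, 2 overlap.
p, r = precision_recall({"d1", "d2", "d3", "d4"}, {"d1", "d2", "d5"})
print(p, r)
```

Here half of what was returned is relevant (precision 0.5), yet one relevant document, “d5”, was never found (recall 2/3). An LLM answering from this context would not know what it was missing.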

Rav Dicta

While attending the recent World Congress of Jewish Studies, I heard a presentation by Dr. Moshe Koppel about Rav Dicta (rav.dicta.org.il), an AI posek. He explained how researchers at Dicta have essentially eliminated hallucinations to give accurate responses. They accomplish this with a series of prompts, rather than directly returning the LLM’s response.

First, they ask Claude (an LLM) which sources are relevant to answering the halachic query. Next, they filter those Claude-provided sources: Dicta privately possesses a massive digitized Jewish library, so they can remove the half that don’t exist because they were hallucinations. Then they provide Claude with the full text of each cited source, ask whether it is indeed relevant to the query, and filter out the many that are not. Finally, they provide the actual text of the relevant sources as context, together with the initial query, and instruct Claude to answer based only on those sources.
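The four steps can be sketched as follows. This is not Dicta’s code, which isn’t public; it is a minimal illustration of the steps as described, assuming a hypothetical `ask_llm` function that sends a prompt to an LLM such as Claude and returns its text, and a hypothetical `library` dictionary mapping source titles to their full texts. The prompt wording is invented.

```python
from typing import Callable

def answer_with_verified_sources(
    query: str,
    library: dict[str, str],        # hypothetical: source title -> full text
    ask_llm: Callable[[str], str],  # hypothetical: prompt -> LLM response text
) -> str:
    # Step 1: ask the LLM which sources are relevant (one title per line).
    proposed = ask_llm(f"List sources relevant to this question, one per line:\n{query}")
    # Step 2: keep only sources that actually exist in the library;
    # the rest were hallucinated.
    existing = [t.strip() for t in proposed.splitlines() if t.strip() in library]
    # Step 3: show the LLM each source's full text and keep only those it judges relevant.
    relevant = [
        title
        for title in existing
        if ask_llm(
            "Relevance check. Answer yes or no. Is this text relevant to the question?\n"
            f"Question: {query}\nText: {library[title]}"
        ).strip().lower().startswith("yes")
    ]
    # Step 4: answer using only the verified, relevant sources as context.
    context = "\n\n".join(f"{title}:\n{library[title]}" for title in relevant)
    return ask_llm(f"Answer the question based only on these sources.\n\n{context}\n\nQuestion: {query}")
```

With a stub in place of a real LLM, one can check that a hallucinated title never reaches the final prompt, since it is dropped at step 2 before Claude ever sees it again.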

This form of RAG might be termed gemir; they consult a massive Jewish library and utilize the relevant documents. On the other hand, perhaps Claude does not know all of the relevant sources. (Earlier, they had Claude suggest relevant search terms, which they used to search their library, so perhaps that provides a broader scope. Plus, they’re likely employing sophisticated strategies not publicly shared.)

Global vs. Local Knowledge

Finally, I’m not sure whether the localized knowledge provided by RAG satisfies the gemir requirement. In that same forty-year-old shiur, at the 27:55 mark, after discussing levels of pesak, Rav Schachter turns to global vs. localized knowledge, beginning with “Most people are not qualified; most people takka don’t know that much. You need to know a lot to pasken a shayla.”

He recounts that after learning through the gemara in Yevamot about pesulei kahal and petzua daka, together with a wide array of rabbinic literature on the sugya, he and his friends thought themselves big k’nockers. They decided to test their chops by taking a petzua daka question in Igros Moshe, devising a responsum, and comparing it with Rav Moshe Feinstein’s answer. Rav Moshe came to a completely different conclusion! Instead of beginning with the sugya in Yevamot, Rav Moshe quoted a gemara in Bechorot they’d never heard of, which nobody on the daf quoted, and whose content wouldn’t have initially struck them as relevant. Similarly, someone who knows masechet Shabbat comprehensively might not be able to pasken, because there are some laws of keli sheini not found in masechet Shabbat.

Now, ChatGPT with RAG might actually do a better job of fetching all relevant sugyot, not localized to a single masechet. Still, the required level of gemir might vary depending on the difficulty and type of pesak. The sugya in Bechorot might not address petzua daka explicitly. Rather, Rav Moshe was sevir: he understood the reasoning, the underlying principle being established, and how it would impact the obvious sugyot in Yevamot. Put another way, what’s required is not a pipeline, first gemir to retrieve selected sources and then sevir to reason within them. Rather, one needs to know and understand the entirety of the sources globally, on a conceptual level, and draw on them as appropriate. I don’t think that is how LLMs presently know and fetch relevant texts.