As part of my NLP course, students read and present NLP papers. Several years ago, a student presented this one: Man is to Computer Programmer as Woman is to Homemaker? Debiasing Word Embeddings.

The authors demonstrate that word2vec embeddings encode sexist associations, which makes sense since they are trained on data from the real world, and the real world is sexist. They address the problem by adjusting the embeddings so that gender-neutral words no longer lean toward either gender. This is a pretty prominent paper.

However, I think that their prime example does not stand up to close scrutiny, and I will lay out the reasons here.

One very cool aspect of word2vec embedding vectors is that you can perform vector arithmetic on them. So, for instance, if you take the vector for king, subtract the vector for man, and add the vector for woman, the vector you get will be most similar to the vector for queen. (Henceforth, let us omit the words “the vector for” for the sake of brevity.) Similarly, Beijing - China + England ~= London.

The idea behind this is that, if we plot these 300-dimensional vectors, the distance and direction from [man] to [woman] are the same as from [king] to [queen], so taking the difference between [woman] and [man] and adding it to the king vector will get us to approximately the same spot as [queen].
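To make the geometric claim concrete, here is a minimal sketch that compares the two offsets directly. The 2-dimensional vectors are invented for illustration (they are not word2vec values), and the helper names `sub`, `norm`, and `cosine` are my own.

```python
import math

# Invented 2-D toy vectors, NOT real word2vec values.
# Axis 0 loosely stands for "royalty", axis 1 for "gender".
toy = {
    "man":   [1.0, 1.0],
    "woman": [1.0, -1.0],
    "king":  [3.0, 1.2],
    "queen": [3.1, -0.9],
}

def sub(u, v):
    """Component-wise difference u - v."""
    return [a - b for a, b in zip(u, v)]

def norm(u):
    """Euclidean length of a vector."""
    return math.sqrt(sum(a * a for a in u))

def cosine(u, v):
    """Cosine similarity between two vectors."""
    return sum(a * b for a, b in zip(u, v)) / (norm(u) * norm(v))

gender_offset = sub(toy["woman"], toy["man"])   # step from man to woman
royal_offset = sub(toy["queen"], toy["king"])   # step from king to queen

# If the analogy holds, the two steps point the same way and have similar length.
direction_match = cosine(gender_offset, royal_offset)
length_ratio = norm(royal_offset) / norm(gender_offset)

print(f"direction match: {direction_match:.3f}, length ratio: {length_ratio:.3f}")
```

A direction match near 1 and a length ratio near 1 are what "same distance and direction" means in practice.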

Here is the idea for king, queen, man, and woman, though imagined in 2 dimensions.

And here are countries and their capitals:

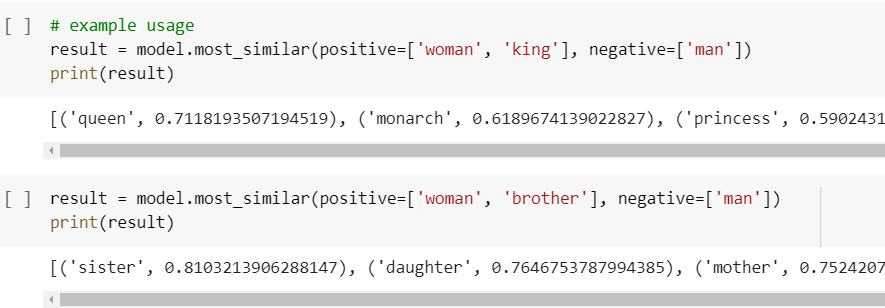

This then allows us to solve analogies: man is to woman as king is to X; solve for X. Here is the example from my colab, with queen as the top result:
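For readers without the colab at hand, the analogy query itself can be sketched on a toy vocabulary. The vectors below are invented for illustration and are not the word2vec values, and `analogy` is a hypothetical helper. It ranks candidates by cosine similarity to the combined vector and leaves the three query words out of the results. Real libraries may also normalize the vectors first, which this sketch does not do.

```python
import math

# Invented 3-D toy vectors, NOT real word2vec values.
# Axes loosely stand for (royalty, gender, plural).
toy = {
    "man":     [0.0, 1.0, 0.0],
    "woman":   [0.0, -1.0, 0.0],
    "king":    [1.0, 1.0, 0.0],
    "queen":   [1.0, -1.0, 0.0],
    "kings":   [1.0, 1.0, 1.0],
    "monarch": [1.0, 0.0, 0.0],
}

def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def analogy(emb, a, b, c, topn=3):
    """Solve 'a is to b as c is to X' by ranking words near c - a + b."""
    target = [ci - ai + bi for ai, bi, ci in zip(emb[a], emb[b], emb[c])]
    # The query words themselves are not allowed as answers.
    candidates = [w for w in emb if w not in (a, b, c)]
    ranked = sorted(candidates, key=lambda w: cosine(emb[w], target), reverse=True)
    return [(w, round(cosine(emb[w], target), 3)) for w in ranked[:topn]]

# man is to woman as king is to X
results = analogy(toy, "man", "woman", "king")
for word, score in results:
    print(word, score)
```

In this toy, queen ranks first, the gender-neutral monarch second, and the plural kings last.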

And, in their paper, they give the following example, leading to their title:

Another example they provide is father is to doctor as mother is to nurse. And they write:

The primary embedding studied in this paper is the popular publicly-available word2vec [24, 25] embedding trained on a corpus of Google News texts consisting of 3 million English words and terms into 300 dimensions, which we refer to here as the w2vNEWS. One might have hoped that the Google News embedding would exhibit little gender bias because many of its authors are professional journalists. We also analyze other publicly available embeddings trained via other algorithms and find similar biases.

They discuss matters with greater nuance later in the paper, so perhaps my excerpts don’t do them full justice. Read it yourself. But given this staked-out position, here are a couple of reasons I believe it may not truly withstand scrutiny.

1. Comparing Bigrams to Unigrams

Their example, “computer programmer”, is a sequence of two words, while “woman”, “man”, and “homemaker” are each a single word. Why didn’t they just use the word “programmer”? Let us explore these two word vectors, observe some properties, and see how this may well account for the result of “homemaker”.

If we look at the most similar vectors to king, this is what we get:

So, a bunch of monarchy-related words. The closest is the plural, but ignoring that, queen was already pretty close, and monarch, the gender-neutral term, is next.

OK, what about programmer?

The plural programmers, the capitalized form, coder as a synonym, a misspelling, then the bigram computer_programmer — we use underscore to capture word pairs. Jon Shiring is a male programmer who worked on the popular game Call of Duty. Then, other computer-related jobs. The further down we go, the less related.

Next, let us examine computer_programmer and its most similar vectors:

OK, this is just weird. Neither coders nor programmer is closest. Rather, the closest vectors are other jobs in technical fields, which also happen to be bigrams: mechanical engineer and electrical engineer. Finally, programmers appears, but the other spellings and synonyms don’t rank. After programmer comes another bigram, graphic designer. As we keep going down the list, we encounter “schoolteacher”, a role often played in our society by women, and then the titular “homemaker”. Erica Sadun is a female computer programmer based in Denver.

So, it is quite possible that they end up on homemaker because the original vector was already quite close to homemaker.
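One way to check this kind of claim is to measure how similar a candidate already is to the base word before any gender arithmetic, and then again after it. The sketch below does that on invented 3-dimensional vectors. The numbers are made up to illustrate the measurement and say nothing about the real embedding, and `before_and_after` is a hypothetical helper.

```python
import math

# Invented 3-D toy vectors, NOT real word2vec values.
# Axes loosely stand for (coding, formal job title, female).
toy = {
    "man":                 [0.0, 0.0, -0.5],
    "woman":               [0.0, 0.0, 0.5],
    "programmer":          [1.0, 0.2, 0.0],
    "computer_programmer": [0.6, 0.8, 0.0],
    "homemaker":           [0.0, 0.8, 0.6],
}

def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def before_and_after(emb, base, candidate, minus="man", plus="woman"):
    """Similarity of candidate to base, and to base - minus + plus."""
    shifted = [b - m + p for b, m, p in zip(emb[base], emb[minus], emb[plus])]
    return cosine(emb[base], emb[candidate]), cosine(shifted, emb[candidate])

bigram_before, bigram_after = before_and_after(toy, "computer_programmer", "homemaker")
unigram_before, unigram_after = before_and_after(toy, "programmer", "homemaker")

print(f"computer_programmer: {bigram_before:.3f} -> {bigram_after:.3f}")
print(f"programmer:          {unigram_before:.3f} -> {unigram_after:.3f}")
```

If the "before" number is already high for the bigram and low for the unigram, much of the final result was there before any gender shift was applied.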

Let us now try the vector arithmetic, but on both programmer and computer_programmer:

For “computer_programmer”, homemaker, graphic_designer, and schoolteacher, all of which already ranked on the top list, are moved up. Yes, there are also housewife and businesswoman, but more about that aspect in the next segment.

Meanwhile, for the unigram “programmer”, their analogy did not seem to budge it at all. The closest items are the very same closest items. It is as gender neutral as one can expect!
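To put a number on "did not budge", one could compare the nearest-neighbor lists before and after the shift and compute how much they overlap. This is a minimal sketch on invented 2-dimensional vectors. The values are made up and `neighbors` is a hypothetical helper, so the output only demonstrates the measurement, not the real embedding.

```python
import math

# Invented 2-D toy vectors, NOT real word2vec values.
# Axis 0 loosely stands for "coding", axis 1 for "female".
toy = {
    "man":         [0.3, -0.1],
    "woman":       [0.3, 0.1],
    "programmer":  [1.0, 0.0],
    "programmers": [1.0, 0.05],
    "coder":       [1.0, 0.1],
    "engineer":    [1.0, -0.5],
    "homemaker":   [0.1, 1.0],
}

def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def neighbors(emb, query, exclude, topn=3):
    """The topn words closest to the query vector, skipping excluded words."""
    candidates = [w for w in emb if w not in exclude]
    return sorted(candidates, key=lambda w: cosine(emb[w], query), reverse=True)[:topn]

skip = {"programmer", "man", "woman"}
shifted = [p - m + w for p, m, w in zip(toy["programmer"], toy["man"], toy["woman"])]

before = neighbors(toy, toy["programmer"], skip)
after = neighbors(toy, shifted, skip)

# Fraction of the original neighbors that survive the shift.
overlap = len(set(before) & set(after)) / len(before)
print(before, after, overlap)
```

An overlap of 1.0 means the same words remain closest, even if their order changes slightly.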

2. Shifting Gender-Neutral Terms Towards Female

There seems to be an error in logical reasoning here: king - man + woman is not at all akin to computer_programmer - man + woman. Why not? Because a king is almost definitionally masculine, while a queen is almost definitionally feminine.

(They partly note this phenomenon in the paper, page 3, when they discuss gender neutral words vs. gender specific words, and what one may expect for each, and the process of debiasing. I think I am saying something different.)

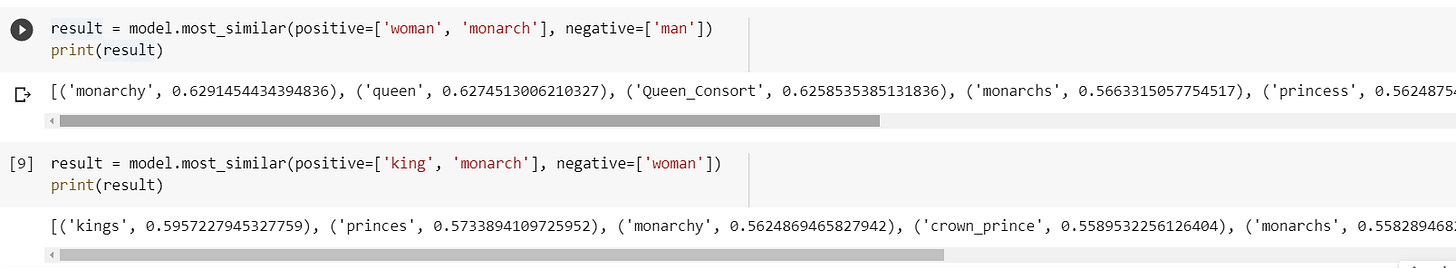

If you take what is already a gender-neutral word and both subtract man and add woman, then you are shifting it greatly towards female-oriented space. So, the parallel would really be monarch, not king or queen. What happens if we shift “monarch” towards male or female in this manner?

OK, that works, in both directions, giving us the female version.
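The two-directional shift of a neutral word can be sketched as follows. The 2-dimensional vectors are invented for illustration and are not the word2vec values, and `shift_and_find` is a hypothetical helper that applies the offset and returns the closest remaining word.

```python
import math

# Invented 2-D toy vectors, NOT real word2vec values.
# Axis 0 loosely stands for "royalty", axis 1 for "gender" (positive = male).
toy = {
    "man":     [0.2, 1.0],
    "woman":   [0.2, -1.0],
    "king":    [1.0, 1.0],
    "queen":   [1.0, -1.0],
    "monarch": [1.0, 0.0],
    "throne":  [0.9, 0.0],
}

def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def shift_and_find(emb, word, minus, plus):
    """Closest word to word - minus + plus, ignoring the three query words."""
    target = [w - m + p for w, m, p in zip(emb[word], emb[minus], emb[plus])]
    candidates = [c for c in emb if c not in (word, minus, plus)]
    return max(candidates, key=lambda c: cosine(emb[c], target))

towards_female = shift_and_find(toy, "monarch", minus="man", plus="woman")
towards_male = shift_and_find(toy, "monarch", minus="woman", plus="man")
print(towards_female, towards_male)
```

The neutral word lands on whichever gendered neighbor lies in the direction of the shift, which is the behavior to expect for any gender-neutral starting point.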

What about computer_programmer? While king and queen are gendered terms, there is no gendered term for computer programmer. So of course the arithmetic is going to shift it into female space. So let us try shifting computer programmer in a male direction.

We are still getting bigrams at the top, but these aren’t as gendered as the female professions. We lose coders, but retain programmer.

If we look at just programmer, as discussed in section 1, the shift doesn’t happen. We are as gender neutral as before.

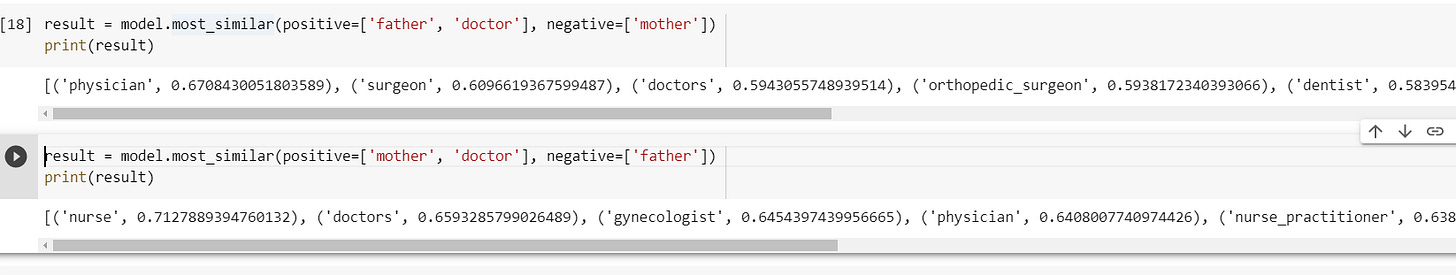

But what about father is to doctor as mother is to X, which returns nurse? They may have played with this example, for why not retain man and woman?

First off, here are the most similar word vectors without any gender shift:

We have physician, the plural doctors, a gynecologist who treats women, a surgeon who treats men and women, a dentist, and so on. A nurse, a role often filled in the US by women, also appears on the list.

So when we shift, using man and woman?

Again, we see a slight shift, in that gynecologist drops down on the list. But this may reflect either the gender of the doctor or that of the patient. Shifting in the direction of woman, we end up with gynecologist on top with 0.71 similarity, and nurse perhaps meaningfully below it, with 0.65 similarity.

Only with father and mother, rather than man and woman, do we get their top result, which is also insulting because nurses get paid less and are less respected than doctors.

We should also note that a mother will often nurse her infant, so shifting a gender neutral word towards mother might nudge nurse just a bit higher, to the top result. Doctors, the plural, still comes out ahead of gynecologist in this scenario.